【Microsoft】AI+ Era The Future for Artificial Intelligence and its Role In Society

Today, we are entering the era of the fourth industrial revolution. Artificial intelligence, autonomous driving, the Internet of Things (IoT), mixed reality and related technologies have become the focus of this revolution. In this era, the world's data will double every 18 months. It is expected that by 2020 global data will reach 40 ZB (equivalent to 40 trillion GB), and technology will become more intuitive, able to communicate with people in a variety of ways, and “smarter”: able to learn independently through experience, just as humans do. Businesses will be able to understand and serve customers in ways they never imagined and ultimately solve the toughest challenges in the world.

Throughout the history of human innovation, automobiles, printing, steam engines, manned flight, rockets and satellites have all been accelerators that drove the rapid development of mankind across a vast range of fields. This time, artificial intelligence will become the largest accelerator. Mainframes, PCs, the internet, mobile phones, the cloud: all of these are the prelude to artificial intelligence. AI not only extends human abilities but also strengthens our brainpower; it is not only an advance of the digital age but also the ultimate goal that technological visionaries, including Turing, dreamed of before the birth of the computer.

In the summer of 1956, a team of researchers met at Dartmouth College to explore the development of computer systems capable of learning from experience, much as people do. But even this seminal moment in the development of AI was preceded by more than a decade of exploration of the notion of machine intelligence, exemplified by Alan Turing’s quintessential test: a machine could be considered “intelligent” if a person interacting with it (by text in those days) could not tell whether it was a human or a computer.

Researchers have been advancing the state of the art in AI in the decades since the Dartmouth conference. Developments in subdisciplines such as machine vision, natural language understanding, reasoning, planning and robotics have produced an ongoing stream of innovations, many of which have already become part of our daily lives. Route-planning features in navigation systems, search engines that retrieve and rank content from the vast amounts of information on the internet, and machine vision capabilities that enable postal services to automatically recognize and route handwritten addresses are all enabled by AI.

We think of AI as a set of technologies that enable computers to perceive, learn, reason and assist in decision-making to solve problems in ways that are similar to what people do. With these capabilities, how computers understand and interact with the world is beginning to feel far more natural and responsive than in the past, when computers could only follow pre-programmed routines.

Not so long ago, we interacted with computers via a command-line interface. And while the graphical user interface was an important step forward, we will soon be routinely interacting with computers by talking to them, much as we would to a person. To enable these new capabilities, we are, in effect, teaching computers to see, hear, understand and reason.1 Key technologies include:

Vision: the ability of computers to “see” by recognizing what is in a picture or video.

Speech: the ability of computers to “listen” by understanding the words that people say and to transcribe them into text.

Language: the ability of computers to “comprehend” the meaning of the words, taking into account the many nuances and complexities of language (such as slang and idiomatic expressions).

Knowledge: the ability of a computer to “reason” by understanding the relationships between people, things, places, events and the like. For instance, a search result for a movie provides information about the cast and other movies those actors were in; or, at work, when you participate in a meeting, the last several documents that you shared with the person you’re meeting with are automatically delivered to you. These are examples of a computer reasoning by drawing conclusions about information.
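The movie example can be sketched as a lookup over a small graph of relationships. This is only an illustration, not a description of any real search system: the actor and movie names, `CAST_EDGES`, `build_graph` and `related_movies` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy data: (actor, movie) pairs invented for illustration.
CAST_EDGES = [
    ("Actor A", "Movie X"),
    ("Actor B", "Movie X"),
    ("Actor A", "Movie Y"),
    ("Actor B", "Movie Z"),
    ("Actor C", "Movie Z"),
]


def build_graph(edges):
    """Index the edges in both directions: movie -> actors and actor -> movies."""
    cast_of = defaultdict(set)
    films_of = defaultdict(set)
    for actor, movie in edges:
        cast_of[movie].add(actor)
        films_of[actor].add(movie)
    return cast_of, films_of


def related_movies(movie, cast_of, films_of):
    """For each cast member of `movie`, list the other movies they appeared in."""
    return {
        actor: sorted(films_of[actor] - {movie})
        for actor in sorted(cast_of[movie])
    }


cast_of, films_of = build_graph(CAST_EDGES)
print(related_movies("Movie X", cast_of, films_of))
```

The "conclusion" here is a two-step traversal (movie to cast, then cast to other movies). Production knowledge graphs hold many more entity and relationship types, but the idea of following relationships to answer a question is the same.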

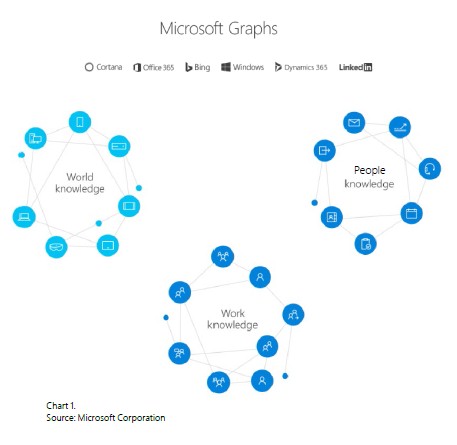

Computers are learning the way people do; namely, through experience. For computers, experience is captured in the form of data. In predicting how bad traffic will be, for example, computers draw upon data regarding historical traffic flows based on the time of day, seasonal variations, the weather, and major events in the area such as concerts or sporting events. More broadly, rich “graphs” of information are foundational to enabling computers to develop an understanding of relevant relationships and interactions between people, entities and events. In developing AI systems, Microsoft is drawing upon graphs of information that include knowledge about the world, about work and about people.
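As a rough illustration of experience captured as data, the traffic example can be reduced to averaging past observations that share the same conditions. This is a minimal sketch under stated assumptions: the records in `HISTORY`, the chosen features (hour, weather, major event) and the `fit` and `predict` helpers are hypothetical, and real systems use far richer models.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical observations:
# (hour of day, weather, major event nearby, observed delay in minutes)
HISTORY = [
    (8, "clear", False, 12.0),
    (8, "clear", False, 14.0),
    (8, "rain", False, 20.0),
    (18, "clear", True, 35.0),
    (18, "clear", True, 25.0),
    (18, "clear", False, 15.0),
]


def fit(history):
    """Group past delays by their conditions and store the average per group."""
    groups = defaultdict(list)
    for hour, weather, event, delay in history:
        groups[(hour, weather, event)].append(delay)
    return {conditions: mean(delays) for conditions, delays in groups.items()}


def predict(model, hour, weather, event, default=None):
    """Return the average delay seen under these conditions, or `default` if unseen."""
    return model.get((hour, weather, event), default)


model = fit(HISTORY)
print(predict(model, 8, "clear", False))   # average of 12.0 and 14.0
print(predict(model, 18, "clear", True))   # average of 35.0 and 25.0
```

The more historical data is available for each combination of conditions, the more reliable the averages become, which is the sense in which more data means more experience.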

Thanks in part to the availability of much more data, researchers have made important strides in these technologies in the past few years. In 2015, researchers at Microsoft announced that they had taught computers to identify objects in a photograph or video as accurately as people do in a test using the standard ImageNet 1K database of images.2 In 2017, Microsoft’s researchers announced they had developed a speech recognition system that understood spoken words as accurately as a team of professional transcribers, with an error rate of just 5.1 percent on the standard Switchboard dataset. In essence, AI-enhanced computers can, in most cases, see and hear as accurately as humans. Recently, Microsoft Research announced that its machine translation system had achieved human parity on a commonly used test set of news stories called newstest2017. Machine translation is one of the most challenging tasks in natural language processing.
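To make the speech recognition figure concrete, the sketch below assumes the quoted error rate is a word error rate (WER), the usual metric for speech recognition benchmarks: the number of word substitutions, deletions and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The function name and the sample sentences are illustrative only and are not taken from the Switchboard dataset.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the transcripts, divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One substituted word out of four reference words.
print(word_error_rate("a b c d", "a x c d"))
```

Under this definition, an error rate of 5.1 percent corresponds to roughly one word-level error for every 20 words in the reference transcript.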